Mean absolute scaled error

In statistics, the mean absolute scaled error (MASE) is a measure of the accuracy of forecasts. It was proposed in 2006 by Australian statistician Rob Hyndman, who described it as a "generally applicable measurement of forecast accuracy without the problems seen in the other measurements."[1]

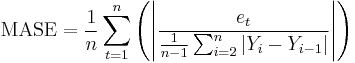

The mean absolute scaled error is given by

$$
\mathrm{MASE} = \frac{1}{n}\sum_{t=1}^{n}\frac{\left|e_t\right|}{\frac{1}{n-1}\sum_{i=2}^{n}\left|Y_i - Y_{i-1}\right|}
$$

where the numerator $e_t$ is the forecast error for a given period, defined as the actual value ($Y_t$) minus the forecast value ($F_t$) for that period: $e_t = Y_t - F_t$. The denominator is the mean absolute error of the one-step "naive forecast method", which uses the actual value from the prior period as the forecast: $F_t = Y_{t-1}$.[3]
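As a rough illustration, the definition can be sketched in a few lines of Python. The function name `mase` and the sample numbers are hypothetical, and the sketch assumes the naive benchmark is computed over the same series as the forecast errors; in practice the scaling term is often taken from a separate training series.

```python
def mase(actual, forecast):
    """Mean absolute scaled error over a single series (illustrative sketch)."""
    if len(actual) != len(forecast):
        raise ValueError("actual and forecast must have the same length")
    n = len(actual)
    if n < 2:
        raise ValueError("need at least two observations")

    # Numerator: mean absolute forecast error, with e_t = Y_t - F_t.
    mae_forecast = sum(abs(y - f) for y, f in zip(actual, forecast)) / n

    # Denominator: mean absolute error of the one-step naive forecast,
    # which predicts each value with the previous one (F_t = Y_{t-1}).
    naive_mae = sum(abs(actual[i] - actual[i - 1]) for i in range(1, n)) / (n - 1)

    if naive_mae == 0:
        raise ZeroDivisionError("MASE is undefined when all actual values are equal")
    return mae_forecast / naive_mae


# Made-up data: every forecast misses by 1, while the naive forecast
# misses by 2, 1 and 2, so the ratio is 1 / (5/3), roughly 0.6.
actual = [10.0, 12.0, 11.0, 13.0]
forecast = [11.0, 11.0, 12.0, 12.0]
score = mase(actual, forecast)
```

A value below 1 means the forecast has a smaller mean absolute error than the naive forecast on this series; a value above 1 means it has a larger one.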

This scale-free error metric "can be used to compare forecast methods on a single series and also to compare forecast accuracy between series. This metric is well suited to intermittent-demand series because it never gives infinite or undefined values",[1] except in the irrelevant case where all historical data are equal.[2]
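Both properties can be checked on a small example. The sketch below uses a hypothetical helper, `mase_or_none`, and made-up demand figures; returning `None` for the degenerate case is an illustrative choice, not a convention taken from the sources cited here.

```python
import math


def mase_or_none(actual, forecast):
    """MASE on one series; returns None when the naive benchmark error is zero."""
    n = len(actual)
    mae = sum(abs(y - f) for y, f in zip(actual, forecast)) / n
    naive_mae = sum(abs(actual[i] - actual[i - 1]) for i in range(1, n)) / (n - 1)
    return None if naive_mae == 0 else mae / naive_mae


# Hypothetical intermittent-demand series: most periods have zero demand.
demand = [0, 3, 0, 0, 5, 0]
forecast = [1, 1, 1, 1, 1, 1]

base = mase_or_none(demand, forecast)

# Scale-free: changing the units of both series leaves the value unchanged.
scaled = mase_or_none([1000 * y for y in demand], [1000 * f for f in forecast])
assert math.isclose(base, scaled)

# The zero-demand periods do not make the value infinite or undefined.
assert math.isfinite(base)

# Degenerate case: all historical values are equal, so the naive forecast
# has zero error and the ratio is undefined.
flat = mase_or_none([4, 4, 4, 4], [3, 4, 5, 4])
assert flat is None
```

A percentage-based error computed on the same demand series would divide by the zero actual values, which is the problem the scaled error avoids.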

References

- Hyndman, R. J. (2006). "Another look at measures of forecast accuracy." Foresight, issue 4 (June 2006), p. 46.

- Hyndman, R. J. and Koehler, A. B. (2006). "Another look at measures of forecast accuracy." International Journal of Forecasting, volume 22, issue 4, pp. 679-688. doi:10.1016/j.ijforecast.2006.03.001

- Hyndman, R. J., et al. (2008). Forecasting with Exponential Smoothing: The State Space Approach. Berlin: Springer-Verlag. ISBN 978-3-540-71916-8.